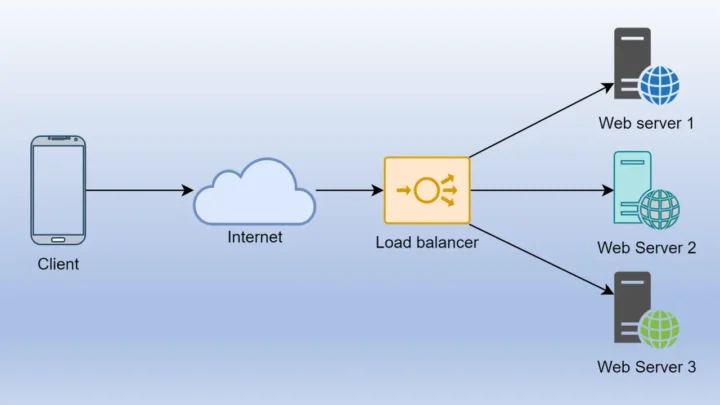

A load balancer is a must-have in any distributed system. Load balancers provide redundancy and improve application availability, reliability, and responsiveness by sharing traffic across multiple backends.

A load balancer acts as a mediator between clients and a pool of servers, distributing incoming network traffic across multiple backend servers to make good use of resources and prevent any single server from being overloaded.

What about balancing load across other components in the system, such as databases, caches, and internal servers? To achieve full scalability and reliability, we can place load balancers at every layer, depending on the business need.

Behind the Scenes: How Do Load Balancers Work?

Load balancers employ a variety of techniques to optimize traffic distribution and deliver reliable service:

- Health Monitoring: Load balancers regularly check the health of servers in the backend pool by monitoring response times, error rates, and resource utilization. If a server becomes unresponsive or experiences issues, the load balancer swiftly removes it from the pool, ensuring that traffic is routed only to healthy servers.

- Load Distribution: Load balancers use an algorithm to decide which server receives each incoming request. Round-robin, weighted round-robin, least connections, and IP hash are some of the commonly used algorithms. Depending on the algorithm, the decision can factor in server capacity (weights), the current number of connections, or the client address.

- Session Persistence (Sticky sessions): To maintain a consistent user experience, load balancers can be configured to maintain session persistence. By ensuring that subsequent requests from a client are directed to the same server, load balancers preserve session data and prevent disruptions in ongoing sessions or transactions.

Nice to read Choosing a stickiness strategy for your load balancer – AWS

- SSL/TLS Termination: Load balancers can offload SSL/TLS encryption and decryption processes, reducing the computational burden on backend servers. This enables servers to focus on handling application logic and enhances overall system performance.

- Content-based routing: A load balancer can route traffic to specific backend servers based on predefined rules. These rules are usually written against the content of incoming requests (URL, headers, cookies).
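The selection algorithms named above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular load balancer implements them: `Backend`, `RoundRobin`, `least_connections`, and `ip_hash` are hypothetical names, and the `healthy` flag is assumed to be kept up to date by a separate health checker that is not shown.

```python
import hashlib
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True          # assumed to be updated by a health checker (not shown)
    active_connections: int = 0   # in-flight requests currently on this backend


class RoundRobin:
    """Cycle through backends in order, skipping unhealthy ones."""

    def __init__(self, backends):
        self.backends = backends
        self._cycle = itertools.cycle(backends)

    def pick(self):
        # Look at each backend at most once per call.
        for _ in range(len(self.backends)):
            backend = next(self._cycle)
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends")


def _healthy(backends):
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends")
    return healthy


def least_connections(backends):
    """Pick the healthy backend with the fewest in-flight connections."""
    return min(_healthy(backends), key=lambda b: b.active_connections)


def ip_hash(backends, client_ip):
    """Map a client IP to a backend so the same client lands on the same server."""
    healthy = _healthy(backends)
    digest = hashlib.sha256(client_ip.encode()).digest()
    return healthy[int.from_bytes(digest[:8], "big") % len(healthy)]
```

With a pool of three backends, `RoundRobin.pick()` hands out each server in turn and silently skips any backend whose `healthy` flag is false. `ip_hash` gives a simple form of stickiness, but the plain modulo remaps many clients whenever the set of healthy backends changes; consistent hashing is a common way to limit that.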

Hardware vs Software load balancer: Which one fits you?

A load balancer can be a physical device, a virtualized instance running on specialized hardware, or a software process.

Hardware load balancers (physical devices) offer excellent scalability, advanced features, and high reliability. However, they tend to be more expensive and less flexible than software alternatives. F5 BIG-IP and Citrix ADC are two well-known hardware load balancers.

Software load balancers, on the other hand, run on standard server hardware or in virtualized environments and offer flexibility, cost-effectiveness, and easy system integration. They are widely used in cloud-based environments and modern software-defined architectures. HAProxy and NGINX are two well-known software load balancers.

What are the different types of load balancers?

Load balancers come in various types, each tailored to specific requirements and network architectures.

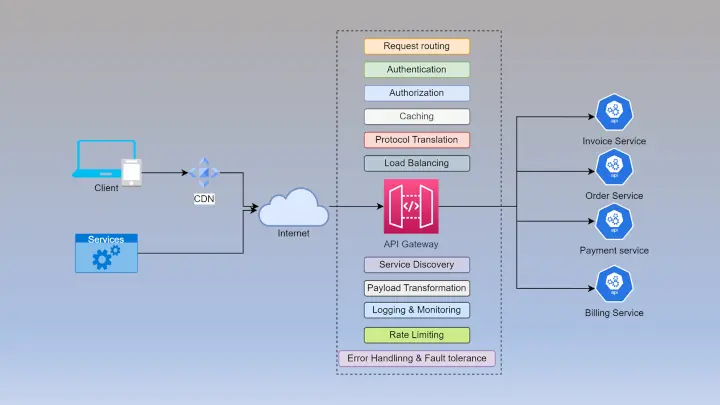

- Application Load Balancer (ALB): An application load balancer operates at Layer 7 (the application layer) of the OSI model. ALBs are capable of making routing decisions based on application-specific data, such as HTTP/HTTPS content, URLs, or headers. They offer advanced traffic management features, content-based routing, and SSL/TLS termination, making them suitable for complex web applications.

- Network Load Balancer (NLB): A network load balancer works at Layer 4 (the transport layer) of the OSI model. NLBs distribute traffic based on network-level information, such as IP addresses and ports. They are known for their high throughput, low latency, and scalability, making them suitable for TCP/UDP-based services.

- Global Load Balancer: A global load balancer operates across multiple geographic locations or data centers, allowing for optimal traffic distribution and improved availability on a global scale. These load balancers route requests based on proximity, load conditions, or other metrics to provide low latency and high availability for users worldwide.

Nice to read Load balancers should be optimized to work best for your workload | Google Cloud Blog

- DNS Load Balancer: A DNS load balancer distributes traffic by leveraging DNS (Domain Name System) to resolve domain names to multiple IP addresses. It can perform load balancing by rotating IP addresses in DNS responses or using geolocation-based DNS resolution. DNS load balancing is often used for geographically dispersed services.

- Reverse Proxy Load Balancer: A reverse proxy load balancer sits between clients and servers, accepting incoming requests on behalf of the servers. It performs load balancing by forwarding requests to the appropriate backend servers based on various algorithms or configurations. Reverse proxy load balancers can provide additional features like SSL termination, caching, and security enhancements.

It’s important to choose the right type of load balancer based on the specific needs of your application, traffic patterns, scalability requirements, and the desired level of control and flexibility. A single application can also combine multiple types of load balancers as needed.
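To make the Layer 7 idea concrete, here is a small sketch of content-based routing in Python. It is an illustration under assumptions rather than the configuration model of any real product: `Rule`, `route`, the pool names, and the `x-beta` header are all hypothetical, and header names are assumed to have been lowercased before matching.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    pool: str                                     # name of the target backend pool
    path_prefix: str = ""                         # an empty prefix matches every path
    headers: dict = field(default_factory=dict)   # header values that must match exactly

    def matches(self, path, headers):
        if not path.startswith(self.path_prefix):
            return False
        return all(headers.get(k) == v for k, v in self.headers.items())


def route(rules, path, headers, default_pool="web"):
    """Return the pool of the first matching rule, or the default pool."""
    for rule in rules:
        if rule.matches(path, headers):
            return rule.pool
    return default_pool


# Rules are evaluated top to bottom, so the more specific rule comes first.
RULES = [
    Rule(pool="api-beta", path_prefix="/api/", headers={"x-beta": "1"}),
    Rule(pool="api", path_prefix="/api/"),
    Rule(pool="static", path_prefix="/static/"),
]
```

A request for `/api/users` carrying `x-beta: 1` goes to the `api-beta` pool, the same path without the header goes to `api`, and anything that matches no rule falls through to the default pool. A Layer 4 load balancer cannot make this kind of decision because it never inspects the request path or headers.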

Nice to read What is the OSI model? Real life example with file sharing

Nice to read Cloud Load Balancing overview

How to choose/design the perfect load balancer for your application?

When designing a load balancing architecture, several factors come into play:

Scalability: Load balancers must scale seamlessly as traffic volumes grow. They should be capable of dynamically adjusting server capacity and expanding the pool to accommodate increasing workloads.

High Availability: Load balancers themselves need to be highly available to avoid becoming a single point of failure. Implementing redundancy and failover mechanisms ensures continuous service availability, even in the event of load balancer failures.
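One common way to add that redundancy is an active/passive pair, where a standby load balancer takes over once the active one stops sending heartbeats. The Python sketch below only illustrates the decision logic; `FailoverPair`, the node names, and the 3-second timeout are assumptions, and real deployments typically rely on mechanisms such as VRRP with a floating IP rather than application code.

```python
import time


class FailoverPair:
    """Active/passive pair: the standby takes over when the primary's heartbeat goes stale."""

    def __init__(self, primary, standby, timeout_s=3.0, clock=time.monotonic):
        self.primary = primary
        self.standby = standby
        self.timeout_s = timeout_s
        self.clock = clock                 # injectable so the logic can be tested
        self.last_heartbeat = clock()

    def heartbeat(self):
        """Record that the primary has just reported it is alive."""
        self.last_heartbeat = self.clock()

    def active(self):
        """Return the node that should currently receive traffic."""
        if self.clock() - self.last_heartbeat > self.timeout_s:
            return self.standby
        return self.primary
```

The timeout is a trade-off: a short value fails over quickly but risks flapping on a brief network hiccup, while a long value leaves traffic pointed at a dead node for longer.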

Security: Load balancers serve as a vital line of defense against malicious attacks. Incorporating features like SSL termination, DDoS protection, and web application firewalls (WAFs) safeguards the infrastructure and protects against threats.

Read What is a DDoS attack? How it works? Protections

Monitoring and Analytics: Load balancers provide invaluable insights into network traffic patterns, server performance, and application behavior. Leveraging comprehensive monitoring and analytics capabilities helps optimize load balancing algorithms, identify performance bottlenecks, and facilitate proactive troubleshooting.

Takeaway 💡

- Your application already has an API gateway. Do you still need a load balancer? If so, how would you design it?

- Do you need redundancy in load balancers? If not, why not?
