Suppose a service is suddenly flooded with a huge number of requests, but it can only process a limited number of requests per second. To handle this situation, the service needs a rate limiting mechanism that only allows a certain number of requests within a given span of time.

What is a rate limiter?

A rate limiter regulates the number of requests that can be made within a specific time frame. It keeps track of the number of requests made and, based on predefined rules, either allows requests or throttles or blocks those that exceed the defined limits. When a limit is exceeded, the server typically returns the HTTP status “429 Too Many Requests”.
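As a small illustration of the rejection path, the sketch below builds the status, headers, and body a server might send once a limit is exceeded. The function name `throttled_response` and the tuple return shape are assumptions made for this example, not part of any framework; `Retry-After` is the standard HTTP header that tells the client how long to wait.

```python
import math
from http import HTTPStatus


def throttled_response(retry_after_seconds):
    """Build a (status code, headers, body) tuple for a rejected request.

    Hypothetical helper: a real web framework has its own response type.
    """
    status = HTTPStatus.TOO_MANY_REQUESTS  # 429
    # Retry-After takes whole seconds, so round up to avoid retrying too early.
    headers = {"Retry-After": str(math.ceil(retry_after_seconds))}
    return status.value, headers, status.phrase
```

A client that honors `Retry-After` backs off instead of retrying immediately, which keeps rejected traffic from adding to the load.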

Why do we need a rate limiter?

A rate limiter can be needed for several reasons, such as:

- Security: Rate limiting can protect the system from malicious attacks, such as denial-of-service (DoS) or brute-force attacks, by blocking or slowing down requests from suspicious sources.

- Quality: Rate limiting can also help maintain the quality of service (QoS) for legitimate users, by ensuring that the server does not get overloaded or run out of resources.

- Costs: Suppose the service has an auto-scaler. To serve a sudden spike from a low-criticality operation (such as collecting data for analytics), it will deploy new servers, which incurs additional costs. With rate limiting, the spike of requests can instead be spread out over time, avoiding the additional server spin-up.

- Revenue: Certain services might want to limit the number of requests a customer can make based on the tier the customer has subscribed to. To make more requests, the customer needs to move to a higher tier, which generates more revenue for the service.


What are the factors we need to keep in mind while designing/choosing a rate limiter for a system?

To design a rate limiter, we need to consider several factors, such as:

- Scope: whether it applies to a single user, an IP address, an API key, or a service.

- Time window: whether it is based on seconds, minutes, hours, days, or other units of time.

- Actions: whether it rejects, delays, or throttles requests that exceed the limit.

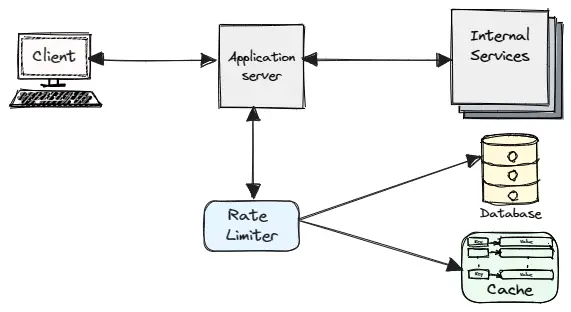

- Storage: whether it uses memory, disk, database, or distributed cache to store the information about the requests and the limits.

- Scalability: whether it can handle high concurrency and distributed systems without compromising accuracy or performance.
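The factors above can be captured in a small rule definition. The following is a minimal sketch, assuming a request is represented as a plain dictionary; the names `RateLimitRule`, `Action`, and `counter_key`, and the `ratelimit:` key prefix, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    REJECT = "reject"
    DELAY = "delay"
    THROTTLE = "throttle"


@dataclass(frozen=True)
class RateLimitRule:
    scope: str           # which request attribute to count by, e.g. "user", "ip", "api_key"
    limit: int           # maximum number of requests per window
    window_seconds: int  # length of the time window
    action: Action       # what to do with requests over the limit


def counter_key(rule, request):
    """Build the storage key under which requests matching this rule are counted."""
    return f"ratelimit:{rule.scope}:{request[rule.scope]}"
```

For example, a rule scoped to `"ip"` counts all requests from one address under a single key, whichever user sent them. The same key can be used in memory or in a distributed cache, depending on the storage choice.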

What are commonly used Rate Limiter Types OR algorithms?

Fixed window

- The time window has a fixed length (for example, one minute) and fixed start and end times (e.g. 07:01-07:02, then 07:02-07:03). The rate limit is applied within each window.

- Easy to understand and implement.

- The application can still receive more traffic than expected if requests arrive at the end of one time window and at the beginning of the next.
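A minimal in-memory sketch of a fixed window counter is shown below. The class name `FixedWindowLimiter` and its parameters are assumptions for illustration; the clock is injectable only so the behavior can be demonstrated deterministically.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per key in each fixed window."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable for testing
        self.state = {}     # key -> (window index, request count)

    def allow(self, key):
        # Every timestamp inside the same window maps to the same index.
        window_index = int(self.clock() // self.window_seconds)
        index, count = self.state.get(key, (window_index, 0))
        if index != window_index:
            # A new window has started, so the counter resets.
            index, count = window_index, 0
        if count >= self.limit:
            return False
        self.state[key] = (index, count + 1)
        return True
```

With a limit of 2 per 60 seconds, two requests at second 59 and two more at second 60 are all allowed, because they fall into different windows. That is the boundary burst described in the last bullet.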

Sliding window

- The time window length is fixed (for example, one minute), but its start and end keep moving (e.g. if a request is received at 07:02:12, the window used for the rate calculation is 07:01:12-07:02:12).

- The limit is enforced evenly over time, which prevents sudden bursts of traffic at window boundaries.

- Slightly more complex to implement than fixed window.
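One common way to implement a sliding window is to keep a log of request timestamps per key. The sketch below uses that log variant; the class name `SlidingWindowLimiter` and its parameters are assumptions for illustration. It stores one timestamp per allowed request, so it uses more memory than a fixed window counter.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key in any trailing window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock             # injectable for testing
        self.log = defaultdict(deque)  # key -> timestamps of allowed requests

    def allow(self, key):
        now = self.clock()
        timestamps = self.log[key]
        # Drop requests that have fallen out of the window ending now.
        while timestamps and timestamps[0] <= now - self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False
        timestamps.append(now)
        return True
```

With a limit of 2 per 60 seconds, two requests at second 59 use up the allowance until second 119, so a request at second 60 is rejected rather than admitted by a fresh window.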

Token bucket

- Maintains a bucket of tokens with a fixed capacity. Tokens are added at a fixed rate, and each request consumes a token.

- Requests can only be processed if tokens are available in the bucket. Once the bucket is empty, requests are either rejected or throttled until new tokens are added over time.
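The token bucket can be sketched in a few lines by refilling lazily, that is, computing how many tokens have accumulated since the last call instead of running a background timer. The class name `TokenBucket` and the `cost` parameter are assumptions for illustration.

```python
import time


class TokenBucket:
    """Token bucket that refills lazily each time a request is checked."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock              # injectable for testing
        self.tokens = float(capacity)   # start full, so an initial burst is allowed
        self.last_refill = clock()

    def allow(self, cost=1):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last call, capped at the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens < cost:
            return False
        self.tokens -= cost
        return True
```

The capacity bounds the size of a burst, while the refill rate bounds the long-term average request rate.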

Leaky bucket

- Requests are added to the bucket, which has a fixed leak rate. If the bucket overflows, requests are either rejected or delayed.

- Smooths out request rates and provides a constant output rate.
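A minimal sketch of the queue-based form of the leaky bucket follows: requests wait in a bounded queue and are released at a fixed interval. The class name `LeakyBucket` and the methods `offer` and `drain` are assumptions for illustration, and the caller is expected to call `drain` periodically to pick up the requests that are due.

```python
import time
from collections import deque


class LeakyBucket:
    """Bounded queue whose contents leak out at a constant rate."""

    def __init__(self, capacity, leak_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.interval = 1.0 / leak_per_second  # seconds between two outputs
        self.clock = clock                     # injectable for testing
        self.queue = deque()
        self.next_leak = clock()               # earliest time of the next output

    def offer(self, request):
        """Queue a request; return False if the bucket would overflow."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # An idle bucket does not save up leak slots for a later burst.
            self.next_leak = max(self.next_leak, self.clock())
        self.queue.append(request)
        return True

    def drain(self):
        """Return the queued requests that are due to leak out by now, in order."""
        now = self.clock()
        due = []
        while self.queue and self.next_leak <= now:
            due.append(self.queue.popleft())
            self.next_leak += self.interval
        return due
```

Unlike the token bucket, a burst of arrivals is not passed through as a burst: it is queued, or rejected on overflow, and released one request per interval.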

It’s important to understand the trade-offs of each type and select the one that best suits your use case. In some cases, a rate limiter is used together with a manual challenge (such as reCAPTCHA) to distinguish between legitimate users and automated clients.

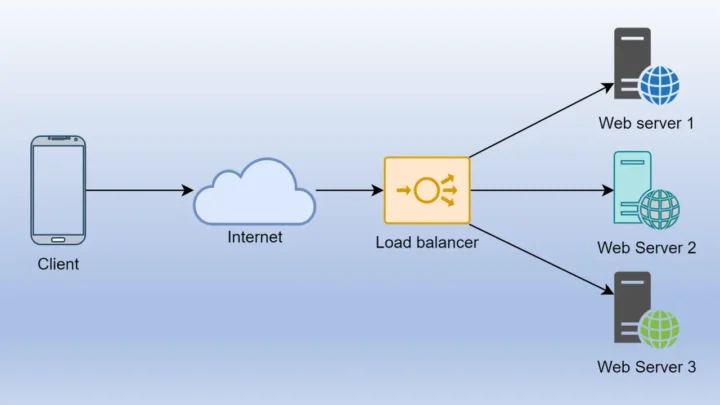

Also, in a distributed system there may be multiple rate limiter instances behind a load balancer. In this scenario we may need additional techniques on top of the commonly used algorithms to manage sessions and race conditions.
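The race condition typically appears when two instances both read a counter, both see it below the limit, and both admit a request. The sketch below simulates this with threads standing in for rate limiter instances and a lock-protected in-process object standing in for a shared store. All names (`SharedCounterStore`, `increment_if_below`, `simulate`) are hypothetical, and the time window is left out to keep the focus on atomicity. In a real deployment the same check-and-increment would need to be a single atomic operation in whatever shared store is used.

```python
import threading


class SharedCounterStore:
    """In-process stand-in for a store shared by all limiter instances."""

    def __init__(self):
        self._counts = {}
        self._lock = threading.Lock()

    def increment_if_below(self, key, limit):
        # Check and increment under one lock, so two callers can never
        # both observe "limit - 1" and both be admitted.
        with self._lock:
            current = self._counts.get(key, 0)
            if current >= limit:
                return False
            self._counts[key] = current + 1
            return True


def run_instance(store, key, limit, attempts, results):
    # Each thread plays the role of one rate limiter instance.
    allowed = sum(store.increment_if_below(key, limit) for _ in range(attempts))
    results.append(allowed)


def simulate(instances=8, attempts=50, limit=100):
    """Return how many requests were admitted across all instances."""
    store = SharedCounterStore()
    results = []
    threads = [
        threading.Thread(target=run_instance,
                         args=(store, "user-1", limit, attempts, results))
        for _ in range(instances)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sum(results)
```

Because the check and the increment happen as one step, the total admitted never exceeds the limit, however the instances interleave.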

What is the best place to implement Rate Limiter?

Where to place the rate limiter in your application architecture depends on your requirements and system needs. The following are some options.

- Application Code:

- Implementing the rate limiter directly within the application code allows for fine-grained control and customization.

- Suitable when rate limiting rules are application-specific or when you have a complex application architecture where rate limiting needs to be applied at different layers.

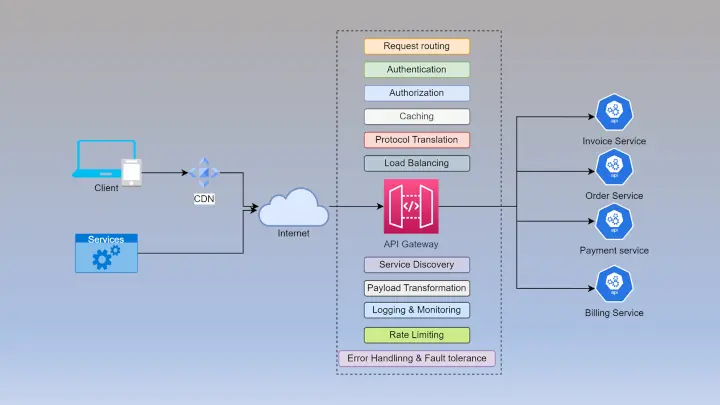

- API Gateway:

- Placing the rate limiter in the API gateway provides centralized control and management of the rate limiting policies.

- Suitable when rate limiting needs to be applied uniformly across multiple APIs or services.

- CDN (Content Delivery Network):

- Some CDNs offer built-in rate limiting capabilities, allowing you to enforce limits closer to the edge locations.

- This approach can be effective in mitigating DDoS attacks and reducing the load on your infrastructure.

- Firewall:

- Rate limiting at the firewall level can help protect your infrastructure from malicious traffic and prevent unauthorized access.

- This approach is beneficial when the goal is to control traffic at the network level before it reaches your application servers.

What are the best rate limiters available on the market?

The choice of the best rate limiter depends on your specific use case, programming language, scalability requirements, and integration preferences. Here are some popular rate limiter options:

- Redis: Redis, an in-memory data structure store, is commonly used as the backing store for rate limiters, typically through atomic commands such as INCR and EXPIRE or through Lua scripts that run atomically on the server. Many rate limiting libraries build on it, and it is widely used for its high performance and scalability.

- Guava Rate Limiter: Guava, a Java library developed by Google, offers the RateLimiter class, which provides a simple and easy-to-use rate limiting solution. It supports a smooth bursty mode and a warm-up mode in which the rate ramps up gradually to the configured value.

- Token Bucket: Token bucket algorithms are widely used for rate limiting purposes. Libraries such as “tokenbucket” in Node.js and “go-redis-rate” in Go implement token bucket rate limiting.

- Envoy Proxy: Envoy is a modern proxy server that offers rate limiting capabilities as part of its feature set. It provides flexible rate limiting configurations and supports different algorithms like fixed window, sliding window, and token bucket.

- Nginx: Nginx, a popular web server and reverse proxy, provides built-in rate limiting functionality. It allows you to define rate limits based on various parameters and is often used as an API gateway or reverse proxy to enforce rate limits.

- AWS (Amazon Web Services):

- AWS WAF (Web Application Firewall): AWS WAF offers rate-based rules that allow you to define rate limits on incoming requests to your web applications. You can configure rate limits based on the number of requests per IP, per resource, or per origin.

- AWS API Gateway: AWS API Gateway provides built-in rate limiting functionality. You can set rate limits based on requests per second (RPS) or requests per minute (RPM) to control the flow of requests to your APIs.

- Amazon CloudFront: CloudFront, AWS’s content delivery network (CDN), allows you to set up rate limiting rules to control the request rate from different sources or IP ranges. It helps protect your infrastructure from excessive traffic or potential DDoS attacks.

- Azure (Microsoft Azure):

- Azure API Management: Azure API Management offers rate limiting policies that can be applied to your APIs. You can define rate limits based on requests per minute, per hour, or per day. It also provides features like burst limits and quota enforcement.

- Azure Application Gateway: Azure Application Gateway can be configured to include rate limiting rules for incoming requests. It supports rate limiting based on the number of requests per minute, per hour, or per day.

- Azure Front Door: Azure Front Door allows you to define rate limiting rules to control the request rate to your applications. You can set limits based on requests per second or requests per minute and customize the response behavior for exceeded limits.

- Google Cloud:

- Google Cloud Armor: Google Cloud Armor provides rate limiting capabilities as part of its security features. You can configure rate limiting rules based on the number of requests per minute, per hour, or per day to protect your applications from excessive or abusive traffic.

- Google Cloud Endpoints: Google Cloud Endpoints allows you to define rate limiting policies for your APIs. You can set rate limits based on requests per minute, per hour, or per day, and customize the response behavior for exceeded limits.

- Google Cloud CDN: Google Cloud CDN enables you to set up rate limiting rules at the edge locations to control the request rate from different sources or IP ranges. It helps protect your applications and infrastructure from traffic spikes or potential attacks.

Takeaway 💡

Will a rate limiter help you mitigate a DDoS attack? If yes, which layers of the OSI model can a rate limiter protect?